Experiments

Simulation Results

In-domain and out-of-domain IL Results on FrankaKitchen. We report the mean and standard deviation of success rate (full-stage completion) and the percentage of the completion (out of 4 stages), evaluated over diverse existing pretrained visual representations trained by GCBC with three seeds. Highlighted scores represent improvements in out-of-domain evaluations and in-domain results with gains exceeding 0.01.

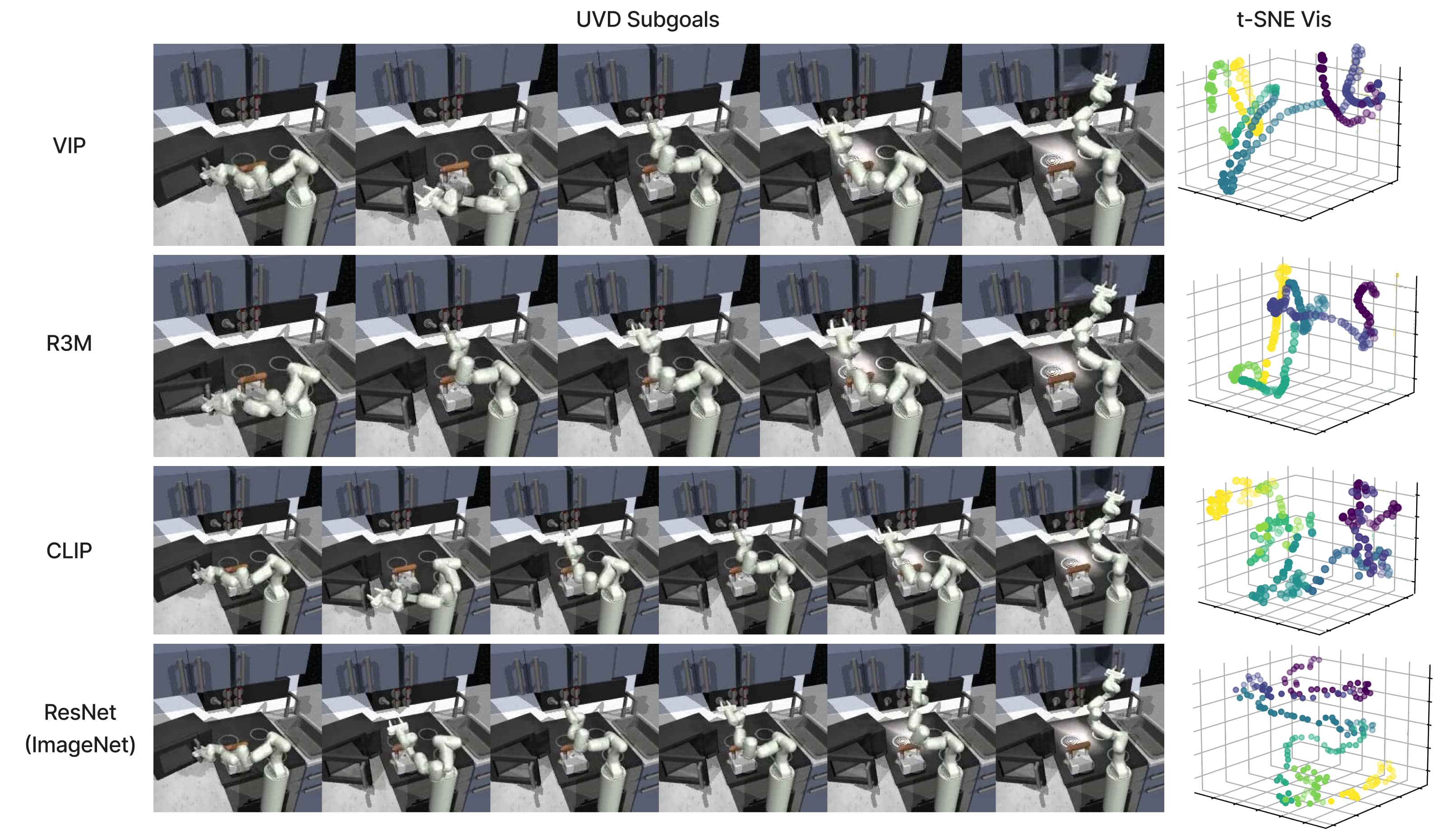

Next, we visualize the qualititive results for one of the task in FrankaKitchen: open the microwave, turn on the bottom burner, toggle the light switch, and slide the cabinet. We compare the decomposition results with different frozen visual backbones, as well as 3D t-SNE visualizations (colors are labeled by each subgoal). Representations pretrained with temporal objectives like VIP and R3M provide more smooth, continuous, and monotone clusters in feature space than others, whereas the ResNet trained for supervised classification on ImageNet-1k provide the most sparse embeddings.

UVD Decomposition Results in Simulation

GCBC ❌

GCBC + UVD

GCRL ❌

Real Robot Results

In-domain evaluation. For real-world applications, we've tested UVD on three multistage tasks: placing an apple in an oven and close the oven ($\texttt{Apple-in-Oven}$), pouring fries then place on a rack ($\texttt{Fries-and-Rack}$), and folding a cloth ($\texttt{Fold-Cloth}$). The corresponding videos show how we break down these tasks into semantically meaningful sub-goals. Two successful and one failed rollouts on these three tasks. All videos for real robot experiments are 2x speed up.

$\texttt{Apple-in-Oven}$

UVD Decomposition Results

❌

$\texttt{Fries-and-Rack}$

UVD Decomposition Results

❌

$\texttt{Fold-Cloth}$

UVD Decomposition Results

❌

Compositional Generalization. We evaluate UVD's ability to generalize compositionally by introducing unseen initial states for these tasks. While methods like GCBC fail (first row) under these circumstances, GCBC + UVD (second row) successfully adapts.